What is MakeHub?

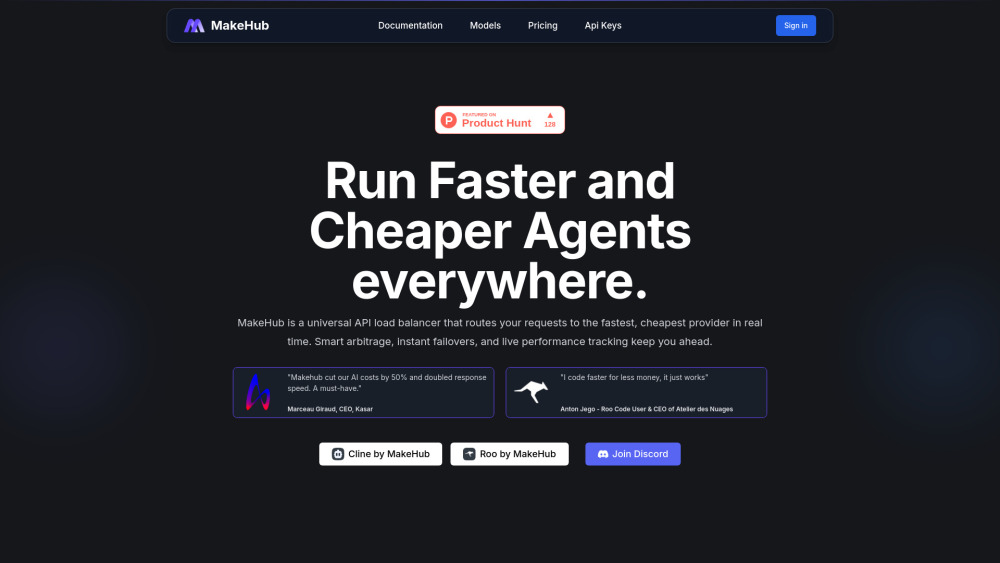

MakeHub is an AI routing platform that optimizes how your applications interact with large language models. Acting as a universal gateway, it automatically directs API calls for models like GPT-4, Claude, and Llama to the most efficient provider at any given moment, balancing speed, cost, and reliability. MakeHub monitors performance in real time across dozens of AI providers and exposes them through a single OpenAI-compatible interface, so your AI workflows can run faster and cheaper, and stay available when an individual provider has problems.
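To make the idea of balancing speed, cost, and reliability concrete, here is a minimal sketch of one way a router could rank providers. It is an illustration only, not MakeHub's actual algorithm: the `ProviderStats` fields, the weights, the provider names, and the numbers are all assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProviderStats:
    """Hypothetical snapshot of one provider's live metrics."""
    name: str
    latency_ms: float      # recently measured response latency
    usd_per_mtok: float    # price per million tokens
    load: float            # 0.0 (idle) to 1.0 (saturated)
    healthy: bool = True   # False if the provider is currently failing


def pick_provider(providers, w_latency=0.4, w_price=0.4, w_load=0.2):
    """Return the healthy provider with the lowest weighted score."""
    candidates = [p for p in providers if p.healthy]
    if not candidates:
        raise RuntimeError("no healthy provider available")

    # Normalize latency and price against the worst candidate so that
    # the three metrics are on a comparable 0..1 scale.
    max_latency = max(p.latency_ms for p in candidates) or 1.0
    max_price = max(p.usd_per_mtok for p in candidates) or 1.0

    def score(p):
        return (w_latency * p.latency_ms / max_latency
                + w_price * p.usd_per_mtok / max_price
                + w_load * p.load)

    return min(candidates, key=score)


# Made-up example data; provider-c is skipped because it is unhealthy.
providers = [
    ProviderStats("provider-a", 420.0, 3.0, 0.30),
    ProviderStats("provider-b", 250.0, 5.0, 0.80),
    ProviderStats("provider-c", 180.0, 2.0, 0.10, healthy=False),
]
best = pick_provider(providers)
fastest = pick_provider(providers, w_latency=0.8, w_price=0.1, w_load=0.1)
```

With the default weights the cheaper `provider-a` is chosen; shifting the weights toward latency selects `provider-b` instead, which shows how the same metrics can yield different routes depending on what the caller prioritizes.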

How to use MakeHub?

Using MakeHub is simple: integrate once with its unified API endpoint, then specify your preferred model in each request. Behind the scenes, MakeHub evaluates the available providers in real time, measuring latency, pricing, and current load, and routes your query to the best-scoring one. Developers can power AI agents, chatbots, or automation tools without managing separate API keys for each provider or handling provider outages themselves, and the speed and cost improvements apply automatically.
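Because the interface is OpenAI-compatible, a request can be sketched in the standard chat-completions shape. The example below is a hedged illustration using only the Python standard library: the base URL, API key, and model name are placeholders (assumptions, not real MakeHub values), so take the actual endpoint and model identifiers from MakeHub's documentation.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with values from MakeHub's documentation.
BASE_URL = "https://makehub.example/v1"
API_KEY = "YOUR_API_KEY"


def build_chat_request(model, prompt, base_url=BASE_URL, api_key=API_KEY):
    """Build an OpenAI-style chat-completions request without sending it."""
    payload = {
        "model": model,  # the preferred model is named in every request
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request, timeout=30):
    """Send the request and return the first reply's text.

    Assumes the response follows the OpenAI chat-completions schema.
    """
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# "example-model" is a placeholder model identifier.
request = build_chat_request("example-model", "Say hello.")
```

Calling `send(request)` would perform the network call once real credentials and a real endpoint are filled in. Since only the base URL and key differ from a direct provider integration, an existing OpenAI-compatible client can usually be pointed at such a gateway by changing those two settings.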