What is HyperLLM?

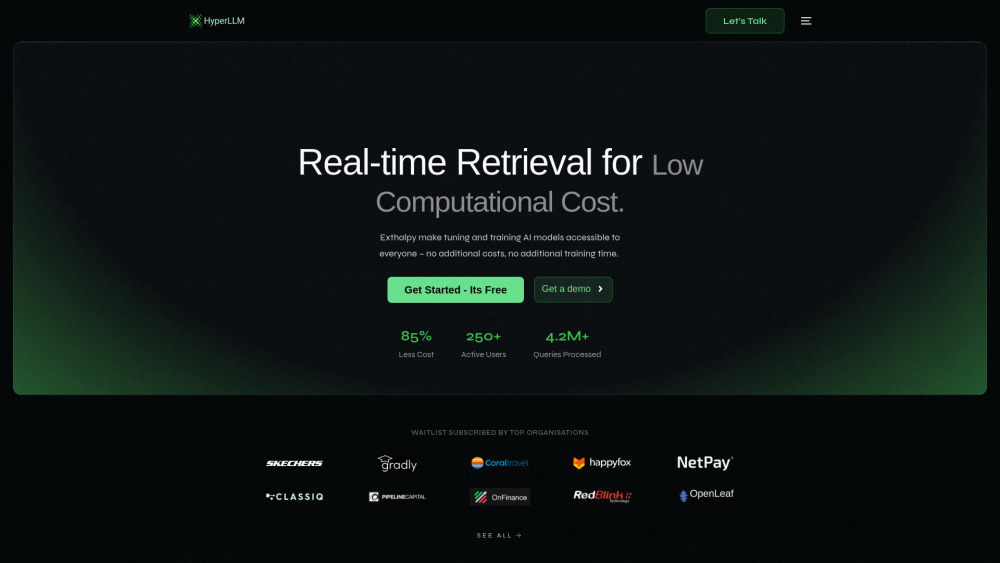

HyperLLM is built on Hybrid Retrieval Transformers: compact language models designed for fast adaptation. By combining hyper-retrieval mechanisms with serverless embedding infrastructure, HyperLLM supports real-time model tuning at a fraction of the usual computational cost. According to HyperLLM, this delivers performance comparable to large-scale models while reducing expenses by up to 85%.

How to use HyperLLM?

To get started, visit hyperllm.org, request a live demo, and begin fine-tuning your custom AI models. Because HyperLLM does not require extensive training cycles or costly GPU clusters, developers, startups, and enterprises can deploy high-performance language models quickly and affordably.